Problem:

The problem described in this post is often seen in enterprise environment where the customer uses ADAL.Net library to run code like the following from their corpnet machine to authenticate to Azure Active Directory with a Federated account. The network topology in a corporate environment is often complex with multiple security measure put in place (proxy, firewall, router, etc…) to protect the LAN environment from the internet. Unfortunately, these security restrictions can cause problem when a request needs to reach Azure AD internet endpoint (login.microsoftonline.com) and/or ADFS server (or other Federated Server) to complete the authentication handshake.

[code lang=”csharp”]var authenticationContext = new AuthenticationContext(AuthorityUrl);

AuthenticationResult authenticationResult = null;

// Authentication using master user credentials

var credential = new UserPasswordCredential(Username, Password);

authenticationResult = await authenticationContext.AcquireTokenAsync(ResourceUrl, ApplicationId, credential);

return authenticationResult;

[/code]The AcquireTokenAsync call on line 9 above can result in Exception such as the following:

Case 1: Error:

Microsoft.IdentityModel.Clients.ActiveDirectory.AdalServiceException: unknown_error: Unknown error —> System.Threading.Tasks.TaskCanceledException: A task was canceled.

at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess(Task task)

at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)

at System.Runtime.CompilerServices.ConfiguredTaskAwaitable`1.ConfiguredTaskAwaiter.GetResult()

at Microsoft.IdentityModel.Clients.ActiveDirectory.HttpClientWrapper.<GetResponseAsync>d__30.MoveNext()

…

at PowerBIEmbedded_AppOwnsData.Controllers.HomeController.<EmbedReport>d__9.MoveNext() in C:\Users\xxx \App Owns Data\PowerBIEmbedded_AppOwnsData\Controllers\HomeController.cs:line 49

ErrorCode: unknown_error

StatusCode: 408

Or

Case 2: Error

System.Net.Http.HttpRequestException: An error occurred while sending the request. —> System.Net.WebException: The underlying connection was closed: An unexpected error occurred on a send. —> System.IO.IOException: Unable to read data from the transport connection: An existing connection was forcibly closed by the remote host. —> System.Net.Sockets.SocketException: An existing connection was forcibly closed by the remote host

at System.Net.Sockets.Socket.EndReceive(IAsyncResult asyncResult)

at System.Net.Sockets.NetworkStream.EndRead(IAsyncResult asyncResult)

..

Analysis:

In these scenario, traditional troubleshooting technique using either ADAL logging or Fiddler does not yield much info since the problem lies deep in the socket connection layer. For the first Exception a quick search for error code 408 discussed here and here tells us that the request is timed out somewhere, but which request? The latter Exception in Case 2 provides a little context in revealing that some remote host is shutting down our request connection. There is quite a bit of work going on for a Federated account happening in the AcquireToken call:

- A home realm discovery request is made to Azure AD to find out where to send the authentication request to

- A request is then made to the Federated Identity Provider endpoint (usually ADFS server) to get the meta data info

- The account is sent to the Federated authorization endpoint for authentication to get a SAML token

- A request is then sent to Azure AD token endpoint with the SAML token to exchange for an OAuth2 token

We need to know how far in the pipeline this authentication mechanism is made before encountering an Exception and what is the URL and IP Address of the endpoint causing the exception. As I mentioned above unfortunately ADAL logging is not detailed enough to shed much info. In the above Case 2 Exception this is what ADAL logging looks like:

[ADAL]-Information 2019-01-04T18:22:04.1279473Z: fca00bbf-d7f2-4ec3-bb76-c5f828aa1854 – AdalLoggerBase.cs: ADAL PCL.Desktop with assembly version ‘4.4.2.0’, file version ‘4.4.2.0’ and informational version ‘4.4.2’ is running…

[ADAL]-Information 2019-01-04T18:22:21.1473375Z: fca00bbf-d7f2-4ec3-bb76-c5f828aa1854 – AdalLoggerBase.cs: === Token Acquisition started:

CacheType: null

Authentication Target: User

, Authority Host: login.microsoftonline.com

[ADAL]-Verbose 2019-01-04T18:22:23.2660794Z: fca00bbf-d7f2-4ec3-bb76-c5f828aa1854 – AdalLoggerBase.cs: Loading from cache.

….

[ADAL]-Information 2019-01-04T18:22:23.3500878Z: fca00bbf-d7f2-4ec3-bb76-c5f828aa1854 – AdalLoggerBase.cs: Checking MSAL cache for user token cache

[ADAL]-Information 2019-01-04T18:22:23.3640906Z: fca00bbf-d7f2-4ec3-bb76-c5f828aa1854 – AdalLoggerBase.cs: Sending request to userrealm endpoint.

[ADAL]-Error 2019-01-04T18:22:25.5532973Z: fca00bbf-d7f2-4ec3-bb76-c5f828aa1854 – AdalLoggerBase.cs: Exception type: System.Net.Http.HttpRequestException

—> Inner Exception Details

Exception type: System.Net.WebException

—> Inner Exception Details

Exception type: System.IO.IOException

—> Inner Exception Details

Exception type: System.Net.Sockets.SocketException

at System.Net.Sockets.Socket.BeginReceive(Byte[] buffer, Int32 offset, Int32 size, SocketFlags socketFlags, AsyncCallback callback, Object state)

All we can tell from the above is that that the Exception happens after we send a home realm discovery request. Not too much to go on from here….

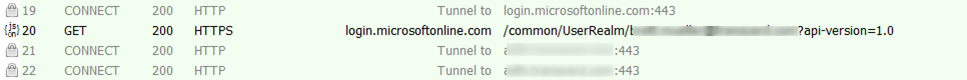

A corresponding for Fiddler trace for Case 2 looks like this:

The Fiddler trace tells us that the home realm discovery request (frame 20) is successful. The next expected request should be made to the ADFS server metadata endpoint (I know this from the tunnel request in frame 21 and 22) and that is missing in this trace. At this point we have an inkling that the ADFS server might have aborted our SSL connection (based on the error message) …

System.Net tracing and Network capture to the rescue….

Instruction to capture system.net tracing and Network capture are below at the end of this post. Both System.Net log and Network capture can provide extremely valuable information about the low-level TCP IP socket connection between the 2 end points. They tell us info about how the socket connection is initialized and established between the 2 IP addresses and if there is any problem with the connection.

Below is snippet of the System.Net log for Case 1:

System.Net Verbose: 0 : [17420] Entering HttpWebRequest#48342929::HttpWebRequest(uri: ‘https://login.microsoftonline.com/common/discovery/instance?api-version=1.1&authorization_endpoint=https://login.microsoftonline.com/<tenant id redacted>/oauth2/authorize’, connectionGroupName: ‘1954745’)

ProcessId=17212

DateTime=2018-08-28T21:36:56.4706363Z

System.Net Verbose: 0 : [17420] Exiting HttpWebRequest#48342929::HttpWebRequest()

ProcessId=17212

DateTime=2018-08-28T21:36:56.4716363Z

System.Net Verbose: 0 : [17420] Entering ServicePoint#51812814::ServicePoint(login.microsoftonline.com:443)

…

System.Net.Sockets Verbose: 0 : [9244] Entering Socket#59411631::InternalEndConnect(ConnectOverlappedAsyncResult#21067700)

ProcessId=17212

DateTime=2018-08-28T21:37:17.5366363Z

System.Net.Sockets Error: 0 : [9244] Socket#59411631::UpdateStatusAfterSocketError() – TimedOut

ProcessId=17212

DateTime=2018-08-28T21:37:17.5376363Z

System.Net.Sockets Error: 0 : [9244] Exception in Socket#59411631::InternalEndConnect – A connection attempt failed because the connected party did not properly respond after a period of time, or established connection failed because connected host has failed to respond <IP Address redacted>.

ProcessId=17212

DateTime=2018-08-28T21:37:17.5386363Z

..

System.Net Error: 0 : [15916] Exception in HttpWebRequest#48342929:: – The request was aborted: The request was canceled..

ProcessId=17212

DateTime=2018-08-28T21:37:26.5476363Z

System.Net Verbose: 0 : [15916] Entering HttpWebRequest#48342929::EndGetResponse()

ProcessId=17212

DateTime=2018-08-28T21:37:26.5516363Z

System.Net Error: 0 : [15916] Exception in HttpWebRequest#48342929::EndGetResponse – The request was aborted: The request was canceled..

ProcessId=17212

DateTime=2018-08-28T21:37:26.5526363Z

System.Net Information: 0 : [15916] ServicePoint#51812814::CloseConnectionGroupInternal(1954745)

ProcessId=17212

DateTime=2018-08-28T21:37:26.5566363Z

We can tell from the above log that the request fails very early in the home realm discovery stage. The client sends a request to Azure AD (login.microsoftonline.com) but that request is timed out We tried running the same code in an Azure VM outside of the customer’s environment and that worked fine. This is enough evidence to conclude that the Azure AD endpoint is reachable and something within the customer’s environment is blocking our request.

Below is the snippet of the System.Net for Case 2:

System.Net Verbose: 0 : [32820] Entering HttpWebRequest#59312528::HttpWebRequest(uri: ‘https://<ADFS URL redacted>/adfs/services/trust/mex’, connectionGroupName: ‘35903099’)

ProcessId=40964

DateTime=2019-01-04T18:22:24.7492510Z

…

System.Net Information: 0 : [41764] Connection#1680021 – Created connection from 10.100.##.##:65057 to 10.100.##.##:443.

ProcessId=40964

DateTime=2019-01-04T18:22:25.1833208Z

…

System.Net Information: 0 : [41764] HttpWebRequest#59312528 – Request: GET /adfs/services/trust/mex HTTP/1.1

ProcessId=40964

DateTime=2019-01-04T18:22:25.2003015Z

System.Net Information: 0 : [41764] ConnectStream#62301924 – Sending headers

…

System.Net.Sockets Error: 0 : [36156] Exception in Socket#28560362::BeginReceive – An existing connection was forcibly closed by the remote host.

ProcessId=40964

DateTime=2019-01-04T18:22:25.2898109Z

System.Net.Sockets Verbose: 0 : [36156] Exiting Socket#28560362::BeginReceive()

ProcessId=40964

DateTime=2019-01-04T18:22:25.3063138Z

System.Net.Sockets Verbose: 0 : [36156] Entering Socket#28560362::Dispose()

ProcessId=40964

DateTime=2019-01-04T18:22:25.3198160Z

System.Net Error: 0 : [36156] Exception in HttpWebRequest#59312528:: – The underlying connection was closed: An unexpected error occurred on a send..

ProcessId=40964

DateTime=2019-01-04T18:22:25.3558191Z.

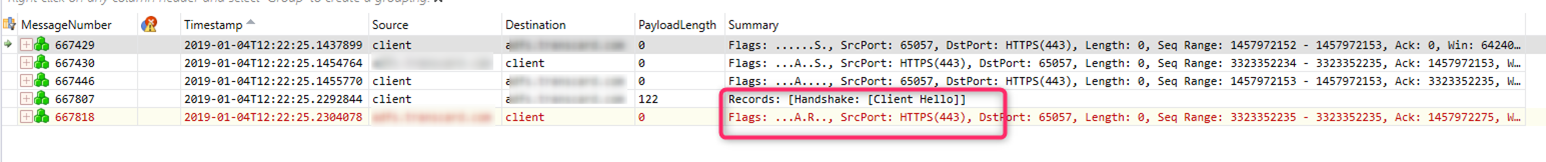

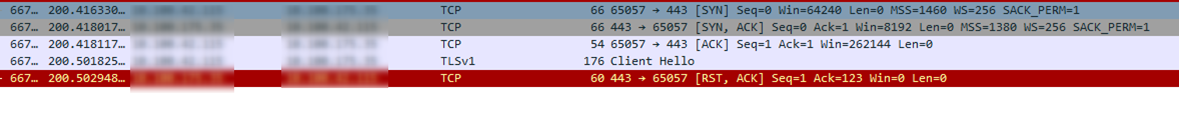

The above log tells us that we are failing to connect to the ADFS Server metadata endpoint. The server shuts down our request. It also tells us the IP address and port number of both the local machine and the remote ADFS server and we can use this info as a filter when looking at the corresponding network trace below in

Filter used: IPv4.Address == xxx and ipv4.Address == yyy and TCP.Port == 443 and tcp.port == 65057

Or in Wireshark:

From the network trace, after the client completes TCP IP 3-way handshake with the ADFS server it sends a Client Hello message to start an SSL connection to the ADFS server. That SSL handshake fails when the server sends a reset command (RST) to close the connection. If the SSL connection is successful, we should see the server sending back a Server Hello message. In this case it’s the customer’s Firewall that blocks the connection.

How to capture a System.Net log:

How to capture a network log:

There are multiple ways to do this. I use netsh command to do this:

(run the following from an Admin command prompt):

- netsh trace start capture=yes persistent=yes traceFile=C:\temp\trace\trace001.etl // start tracing

- Repro the issue

-

netsh trace stop // stop tracing

Also see the following blog for more info:

https://blogs.msdn.microsoft.com/benjaminperkins/2018/03/09/capture-a-netsh-network-trace/

Summary:

I hope the above information is useful to help you diagnose Exceptions like this when using ADAL or any other Authentication library to Azure AD.